Apple AirPods Price in Pakistan (2026) | What’s Actually Worth Buying?

If you use earphones daily in Pakistan, you already know how important they’ve become. Calls, Zoom meetings, gym time, long drives, YouTube before sleep: everything runs on audio now. Because of that, people don’t just want “good sound” anymore. They want something that connects fast, doesn’t drop calls, and doesn’t need fixing every other day. That’s why Apple AirPods remain so popular.

It’s not because they’re the loudest or the cheapest. For Apple users, they simply work. You put them in, they connect, and you forget about them. That’s honestly their biggest strength.

In this post, I’m breaking down the Apple AirPods price in Pakistan, clearing up confusion about which models are actually available in 2026, and helping you decide which one makes sense for your use, not just on paper, but in real life.

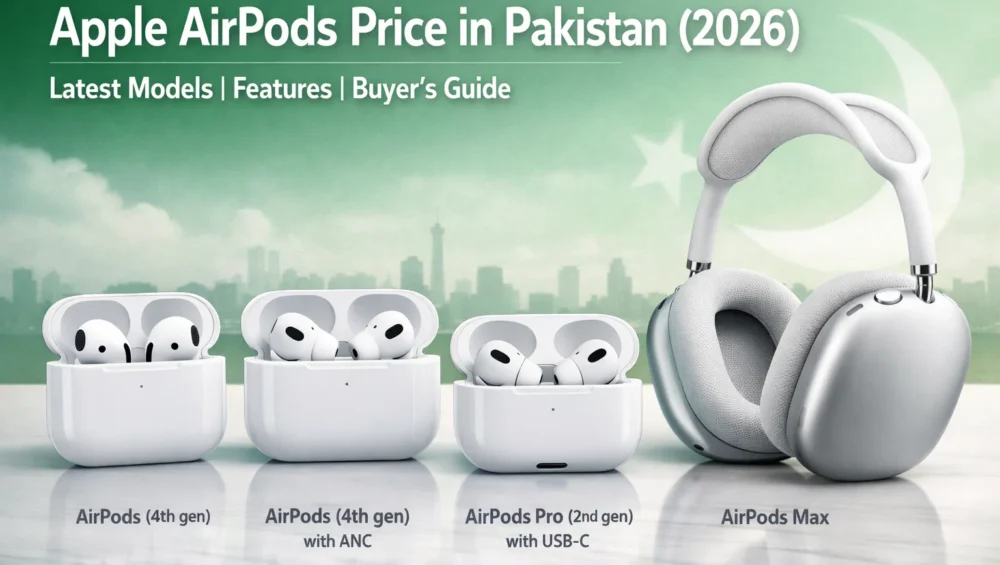

Apple AirPods Models Available in 2026

First things first, let’s clean up the confusion.

As of 2026, Apple’s AirPods lineup is fairly simple. There are no hidden versions or secret upgrades. Apple currently sells three main AirPods types, one of which comes in two variants.

Currently, the official models are:

- AirPods (4th generation)

- AirPods (4th generation) with Active Noise Cancellation

- AirPods Pro (2nd generation) with USB-C

- AirPods Max with USB-C

AirPods (4th Generation)

The standard AirPods (4th generation) are what most people end up buying, and honestly, that makes sense.

They use an open-ear design, so they don’t block your ears completely. This makes them comfortable for long use. You can wear them for calls, meetings, or even hours of casual listening without feeling pressure in your ears.

Sound quality is clean and balanced. Nothing extreme. Voices sound clear, which matters a lot for calls and meetings. Pairing is instant if you’re using an iPhone, and switching between Apple devices happens automatically in the background.

If you just want reliable AirPods that you can use all day without thinking about settings or modes, this model does the job.

AirPods (4th Generation) with Active Noise Cancellation

Apple also sells a slightly upgraded version of the same AirPods (4th generation). Same shape. Same comfort. The difference is Active Noise Cancellation.

Now, let’s be real here. This is not the same noise cancellation you get on the Pro models, but it does help. Office noise, AC hum, background chatter: it tones all of that down. You won’t feel completely cut off from the world, but distractions become less annoying. For people working in shared offices or cafés, this version makes sense.

If you like open-ear AirPods but want a bit more focus during the day, this variant is worth considering.

AirPods Pro (2nd Generation)

If you want proper noise cancellation, there’s no shortcut.

You go for the AirPods Pro (2nd generation). These come with silicone ear tips that sit inside your ear and create a seal, and that seal is what makes the noise cancellation actually work. Traffic noise, office chatter, and background buzz all drop noticeably.

Transparency mode is also excellent. When it’s on, voices sound natural, not metallic, so you can talk to someone without pulling the earbuds out. Apple updated the charging case to USB-C, which is convenient if you’re already using newer Apple devices. Sound-wise, this is still Apple’s most complete earbud package.

If you travel often or wear AirPods for long hours, this is the safest long-term choice.

AirPods Max with USB-C

AirPods Max are a different kind of product. They’re big. They’re heavy. And they’re expensive. But they sound excellent. If you prefer over-ear headphones and want immersive sound, these deliver. Noise cancellation is strong, comfort is solid, and the sound feels wide and detailed. They’re better suited for home, office work, or long listening sessions rather than daily commuting.

Apple now sells them with a USB-C charging port, keeping them in line with newer products.

Apple AirPods Price in Pakistan

Now let’s talk numbers.

The Apple AirPods price in Pakistan generally looks like this:

- AirPods (4th generation): PKR 38,999

- AirPods (4th generation) with Active Noise Cancellation: PKR 50,499

- AirPods Pro (2nd generation) with USB-C: PKR 62,999

- AirPods 3: from PKR 50,999

Prices can move up or down depending on stock and exchange rates, but Mega.pk is one of the more reliable places to check updated pricing and availability.

Which AirPods Should You Buy?

If your main use is calls, meetings, and casual listening, the regular AirPods (4th generation) are more than enough. They’re comfortable, reliable, and easy to live with.

If you work around constant background noise, the ANC version gives you a noticeable improvement without changing the fit.

If you want true noise cancellation and plan to wear them for long stretches, the AirPods Pro (2nd generation) are well worth the extra cost.

And if you value immersive sound and prefer over-ear headphones, the AirPods Max are there, just not for everyone.

Apple AirPods aren’t perfect, but they’re consistent. They connect fast, work smoothly with Apple devices, and don’t create daily headaches. That’s why people keep buying them.

If you understand the Apple AirPods price in Pakistan and know which models are actually available in 2026, choosing the right one becomes much easier. For genuine products and up-to-date prices, Mega.pk remains a solid option in Pakistan.

Apple’s Best Products: Not Just Apple AirPods

People know Apple for more than just the Apple AirPods. The brand has a strong reputation thanks to other high-quality products like:

What features do Apple AirPods Pro 3 offer according to Apple?

Apple says the AirPods Pro 3 offer what it calls the world’s best in-ear Active Noise Cancellation, along with heart rate sensing during workouts and adaptive audio. These additions are designed to deliver clearer calls and a more immersive listening experience than earlier AirPods models.

What is the price range of Apple AirPods in Pakistan?

In Pakistan, Apple AirPods start at PKR 38,999 for the standard AirPods 4 and go up to around PKR 87,999 for the AirPods Pro 3. Prices may vary by seller, stock, and exchange rate.

Which Apple AirPods models support spatial audio and adaptive tuning?

According to Apple, models such as the AirPods 3 and AirPods Pro 3 use Personalized Spatial Audio and Adaptive EQ to fine-tune the sound based on how the earbuds sit in your ears, making music and other audio feel more balanced and immersive.